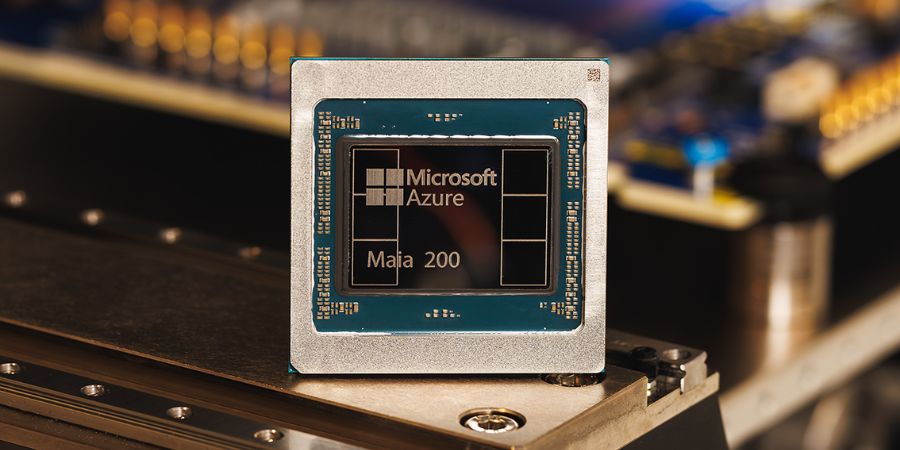

Microsoft launched Maia 200 as a production-focused inference accelerator designed to cut inference cost and scale real-world AI services. The chip prioritizes sustained throughput and the low-precision tensor math that inference workloads favor, delivering that performance within a power envelope cloud operators can deploy. The result is hardware that lets teams run high-volume conversational agents and generative systems with lower token cost and predictable latency.

Let us break it down.

Maia 200 targets inference rather than training. That focus drives design decisions that prioritize memory efficiency, throughput, and low-precision math.

For organizations that handle more than a million queries a day, inference is the primary expense. Maia 200 reduces that cost by delivering high compute for narrow-precision formats and by fitting large models onto dedicated silicon designed around real service needs.

Performance and Architecture

The chip delivers more than 10 petaFLOPS of 4-bit (FP4) performance and roughly 5 petaFLOPS of 8-bit (FP8) performance. Those numbers translate into sustained token throughput for large models and lower amortized cost per token compared with general-purpose GPUs.

The hardware includes over 100 billion transistors, a scale that supports massive on chip resources and high compute density. Those characteristics make Maia 200 especially well suited to inference at scale.

Unlike accelerators that try to be all things to all workloads, Maia 200 specialises. It is built for quantized tensor math, model sharding across nodes, and efficient offload of inference pipelines. The design trade-off accepts that high-precision training will remain the domain of other hardware while providing a clear advantage where it matters most for production services.
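To see why narrow precision and sharding go together, it helps to estimate how much memory the weights alone occupy at each precision. The sketch below is a back-of-the-envelope calculation, not a Maia 200 sizing tool: the 70B parameter count and the 64 GB per-device memory are hypothetical values chosen for illustration, and the estimate ignores KV cache, activations, and runtime overhead.

```python
import math

def weight_bytes(num_params: int, bits_per_weight: int) -> float:
    """Bytes needed to store the weights only (no KV cache or activations)."""
    return num_params * bits_per_weight / 8

def min_shards(num_params: int, bits_per_weight: int, device_memory_gb: float) -> int:
    """Smallest number of devices whose combined memory holds the weights."""
    need_gb = weight_bytes(num_params, bits_per_weight) / 1e9
    return math.ceil(need_gb / device_memory_gb)

PARAMS = 70_000_000_000      # hypothetical 70B-parameter model
DEVICE_MEMORY_GB = 64.0      # hypothetical per-device memory, not a Maia 200 spec

for bits in (16, 8, 4):
    gb = weight_bytes(PARAMS, bits) / 1e9
    shards = min_shards(PARAMS, bits, DEVICE_MEMORY_GB)
    print(f"{bits}-bit weights: {gb:.0f} GB, at least {shards} device(s)")
```

Under these assumptions the weights shrink from 140 GB at 16-bit to 35 GB at 4-bit, which is the difference between needing several devices and fitting on one.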

Real World Impact

Teams operating chatbots, virtual assistants, and real time generative features will see two practical benefits.

- First, cost per token falls because the silicon runs narrow precision math more efficiently than broad purpose alternatives.

- Second, predictable latency improves user experience because the hardware is tuned for steady throughput rather than bursty training workloads. Microsoft reports that the chip is already in internal use and that early partners can test workloads via a provided SDK, creating a fast path from prototype to production.

How to Prepare Your Models

Start by profiling models under representative traffic. Quantize models to the narrow precision formats supported by the chip and test inference accuracy against production datasets.
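Before touching real hardware, the effect of quantization on weights can be approximated in plain Python. The sketch below is a simplified stand-in: it uses symmetric signed-integer quantization with one scale per tensor, which is not the same as the FP4 and FP8 floating-point formats the chip targets, and the function names are illustrative rather than part of any SDK. It only shows the quantize, dequantize, and compare loop that an accuracy check is built on.

```python
def quantize_dequantize(values, bits=4):
    """Round values to a symmetric signed-integer grid, then map back to floats."""
    qmax = 2 ** (bits - 1) - 1            # 7 for 4-bit, 127 for 8-bit
    peak = max(abs(v) for v in values)
    if peak == 0:
        return list(values)               # all zeros: nothing to quantize
    scale = peak / qmax                   # one scale for the whole tensor
    out = []
    for v in values:
        q = max(-qmax, min(qmax, round(v / scale)))
        out.append(q * scale)
    return out

def max_abs_error(original, restored):
    """Largest absolute difference between original and restored values."""
    return max(abs(a - b) for a, b in zip(original, restored))

weights = [0.82, -0.41, 0.07, -0.96, 0.33]   # made-up sample weights
for bits in (8, 4):
    restored = quantize_dequantize(weights, bits)
    print(f"{bits}-bit max abs error: {max_abs_error(weights, restored):.4f}")
```

Weight-level error is only a proxy. The check that matters is end-to-end accuracy on production data, since small weight errors can compound or cancel across layers.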

Use the SDK to measure token latency, memory footprint, and throughput. Expect integration work around runtime toolchains and model sharding, but also expect a clear return on engineering time when operating at high scale.
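The measurements themselves are simple to prototype independently of any vendor tooling. The harness below is a minimal sketch using only the Python standard library. `fake_generate` is a placeholder for a real model call and does not reflect the Maia SDK API, and the harness assumes a non-empty list of prompts and a `generate` callable that returns the number of tokens it produced.

```python
import statistics
import time

def benchmark(generate, prompts, warmup=2):
    """Time each call to `generate` and summarize latency and token throughput."""
    for p in prompts[:warmup]:            # warm-up calls are not timed
        generate(p)
    latencies, tokens = [], 0
    start = time.perf_counter()
    for p in prompts:
        t0 = time.perf_counter()
        tokens += generate(p)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    latencies.sort()
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {
        "requests": len(latencies),
        "p50_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "tokens_per_s": tokens / elapsed if elapsed > 0 else float("inf"),
    }

def fake_generate(prompt):
    """Placeholder for a real inference call; returns a token count."""
    time.sleep(0.001)
    return len(prompt.split())

if __name__ == "__main__":
    print(benchmark(fake_generate, ["summarize this short document"] * 50))
```

Replaying prompts sampled from production traffic, rather than a single repeated string, gives latency percentiles that better reflect what users will see.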

Competitive Context

Maia 200 arrives as hyperscalers invest in first party silicon to reduce dependency on external GPU vendors. It compares directly with other cloud accelerators and is positioned to offer higher inference efficiency for many production workloads.

For customers that run inference at scale, having a dedicated inference option widens deployment choices and strengthens negotiating leverage on cloud compute.

Practical Checklist for Teams

- Identify high volume inference endpoints and measure current cost per token.

- Quantize models and test end to end accuracy against key metrics.

- Use the SDK to measure throughput and latency under peak traffic.

- Draft an integration plan that connects model serving with monitoring and with fallback to other compute resources.

- Compare cost projections with current GPU based stacks at expected traffic levels.
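The first and last checklist items reduce to simple arithmetic once hourly cost and sustained throughput are known. The sketch below shows one way to set it up. All prices, throughput figures, and traffic volumes are hypothetical placeholders, not published Azure or Maia 200 numbers, and should be replaced with your own measurements.

```python
def cost_per_million_tokens(hourly_cost_usd, tokens_per_second):
    """USD per one million tokens for an instance running at a sustained rate."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000

def monthly_cost(tokens_per_day, usd_per_million, days=30):
    """Projected monthly spend at a given daily token volume."""
    return tokens_per_day / 1_000_000 * usd_per_million * days

# Hypothetical inputs: replace with measured throughput and quoted prices.
stacks = {
    "current_gpu": {"hourly_cost_usd": 36.0, "tokens_per_second": 10_000},
    "candidate":   {"hourly_cost_usd": 30.0, "tokens_per_second": 11_000},
}
TOKENS_PER_DAY = 2_000_000_000

for name, cfg in stacks.items():
    per_m = cost_per_million_tokens(**cfg)
    print(f"{name}: ${per_m:.3f}/M tokens, ${monthly_cost(TOKENS_PER_DAY, per_m):,.0f}/month")
```

The throughput values should come from peak-traffic benchmarks, since sustained rates under load are usually lower than best-case figures.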

Final Assessment

Maia 200 is a pragmatic step toward separating training and inference economics. It is not a universal replacement for GPUs, but it is a powerful option for teams focused on running large language models and conversational agents in production.

For product leads and infrastructure engineers, the chip is worth testing now as part of a broader strategy to reduce inference cost and improve user facing latency.

Frequently Asked Questions

What is Maia 200?

Maia 200 is Microsoft’s purpose-built inference accelerator designed to run large AI models more efficiently and at lower cost per token than general-purpose GPUs. It is optimized specifically for inference workloads rather than high-precision training.

What are the headline specs I should know?

The chip contains over 100 billion transistors and delivers more than 10 petaFLOPS at FP4 precision and about 5 petaFLOPS at FP8 precision. It pairs large amounts of high-bandwidth memory with substantial on-chip SRAM to reduce memory movement and sustain throughput.

Which manufacturing process is used?

Maia 200 is manufactured on TSMC’s 3-nanometer process, enabling high transistor density and favorable power efficiency for data center deployment.

How does Maia 200 improve economics for inference?

Microsoft reports roughly 30 percent better performance per dollar compared with the latest generation hardware in its fleet. That translates to lower cost per token and better sustained throughput for high-volume applications such as chatbots, assistants, and generative features.
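A performance-per-dollar gain does not map one-to-one onto a cost reduction. As a rough illustration, assuming the reported 30 percent figure applies uniformly to your workload (which may not hold in practice), the same work costs 1 / 1.3 of what it did before:

```python
def cost_reduction(perf_per_dollar_gain):
    """Fractional cost drop for the same work when perf per dollar rises by the given fraction."""
    return 1 - 1 / (1 + perf_per_dollar_gain)

print(f"{cost_reduction(0.30):.1%}")  # prints 23.1%
```

So a 30 percent improvement in performance per dollar corresponds to roughly 23 percent lower cost per token, not 30 percent.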

Is Maia 200 meant to replace GPUs?

No. Maia 200 is designed to complement existing hardware. It targets inference and low-precision compute where cost and latency matter most. High-precision training and CUDA-native workflows will still rely on GPUs in many environments.

Which workloads benefit most from Maia 200?

High-volume inference workloads benefit the most. Examples include conversational agents, real-time recommendation systems, generative text and image services, and any endpoint where tokens served per second and predictable latency determine cost and user experience.

How can developers integrate models with Maia 200?

Microsoft is previewing the Maia SDK which includes a Triton compiler, PyTorch support, a simulator, and tools such as a cost calculator to profile token economics. Teams should use the SDK to quantize models to supported low-precision formats and benchmark latency and accuracy.

Is Maia 200 available now and where?

Microsoft has begun deploying Maia 200 in Azure and offers access through a preview program. The initial rollout covers selected Azure regions and internal Microsoft teams, with access expanding to more users as capacity grows.

How does Maia 200 compare to chips from Amazon and Google?

Microsoft claims Maia 200 delivers about three times the FP4 throughput of Amazon’s Trainium Gen 3 and has FP8 performance above Google’s TPU v7, positioning it competitively among hyperscaler-designed accelerators for inference.

What are the main integration risks or trade-offs?

Expect engineering work in three areas: model quantization, runtime toolchain changes, and sharding strategy. Accuracy must be re-verified after quantization.

Teams should also build a fallback path so that models or pipeline stages that need GPU functions can still run on GPUs. This engineering effort needs thorough testing, and it pays off mainly when workloads run at high scale.
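One way to handle requests that need GPU functions is an ordered list of backends with explicit fallback. The sketch below is a minimal illustration in plain Python. The backend functions, the `needs_gpu_op` flag, and the `BackendUnavailable` exception are all hypothetical names invented for this example and are not part of any Microsoft SDK.

```python
class BackendUnavailable(Exception):
    """Raised by a backend that cannot serve a given request."""

def run_with_fallback(request, backends):
    """Try each (name, handler) in order; return (name, result) from the first success."""
    errors = []
    for name, handler in backends:
        try:
            return name, handler(request)
        except BackendUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))

def accelerator_backend(request):
    # Hypothetical rule: some requests need an operation only the GPU path supports.
    if request.get("needs_gpu_op"):
        raise BackendUnavailable("unsupported operation")
    return f"accelerator:{request['prompt']}"

def gpu_backend(request):
    return f"gpu:{request['prompt']}"

BACKENDS = [("accelerator", accelerator_backend), ("gpu", gpu_backend)]

print(run_with_fallback({"prompt": "hi"}, BACKENDS))
print(run_with_fallback({"prompt": "hi", "needs_gpu_op": True}, BACKENDS))
```

Keeping the routing decision in one place also makes it easy to log how often the fallback fires, which feeds directly into cost projections.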

Will Maia 200 support popular ML frameworks?

Yes. The Maia SDK targets common frameworks such as PyTorch and integrates a Triton compiler to ease deployment. Microsoft is positioning the SDK to help teams convert and optimize models for the new hardware.

What should a team test first if they want to evaluate Maia 200?

Start by selecting one high-volume inference endpoint. Quantize a representative model, run end-to-end accuracy checks, measure token latency and throughput under realistic traffic, and use the cost calculator in the SDK to compare token economics against current GPU stacks.

Does Maia 200 affect vendor lock-in or cloud strategy?

It expands choices. Hyperscalers building first-party silicon provide more options for inference economics and negotiating leverage. Teams retain the ability to mix GPUs, TPUs, and Maia accelerators to match workload needs.

That said, changing runtimes and toolchains can introduce short-term lock-in risks that should be managed through abstraction and hybrid strategies.